Spectral Deep Learning

Understanding Deep Neural Networks through the lens of the Spectral Theory of Graphs and Dynamical Systems.

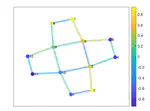

My research in deep learning starts from a simple question: can a neural network be viewed as a graph, with neurons as nodes and connections as links? More specifically, can the adjacency matrix of this graph be analyzed spectrally? It can, which means the connections within the network can be described through the eigenvectors and eigenvalues of the graph’s adjacency matrix. This spectral perspective has led to two main advances:

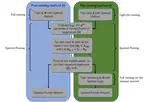

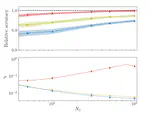

- Eigenvalue Inspection: Examining the eigenvalues before and/or after training indicates which nodes (neurons) matter most to the network’s function. This has been the basis for Structural Pruning techniques, which improve the network’s efficiency and performance by selectively removing the less significant nodes.

- Dynamic Learning Process Reformulation: Recasting learning as a dynamical process has led to a class of recurrent networks. These networks are not static: they learn by reshaping the basins of attraction of their attractors according to predefined criteria, which lets them learn functions or classes with considerable flexibility.
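
The eigenvalue-inspection idea can be illustrated with a minimal sketch. It assumes a single linear layer embedded in a bipartite graph whose adjacency matrix is built as `A = Phi @ diag(lam) @ inv(Phi)`, with `Phi` equal to the identity plus a lower-left block `phi`. This construction, the function names `spectral_adjacency` and `prune_by_eigenvalue`, and the magnitude-based ranking are illustrative assumptions, not necessarily the exact formulation used in the papers.

```python
import numpy as np


def spectral_adjacency(phi, lam_in, lam_out):
    """Assemble A = Phi @ diag(lam) @ inv(Phi) for one layer (illustrative).

    phi     : (n_out, n_in) block of eigenvector entries (assumed layout)
    lam_in  : (n_in,)  eigenvalues attached to the input nodes
    lam_out : (n_out,) eigenvalues attached to the output nodes
    """
    n_out, n_in = phi.shape
    n = n_in + n_out
    # Eigenvector matrix: identity plus a lower-left block linking the layers.
    Phi = np.eye(n)
    Phi[n_in:, :n_in] = phi
    Lam = np.diag(np.concatenate([lam_in, lam_out]))
    return Phi @ Lam @ np.linalg.inv(Phi)


def prune_by_eigenvalue(phi, lam_in, keep):
    """Keep the `keep` input nodes with the largest |eigenvalue| (a heuristic)."""
    kept = np.sort(np.argsort(-np.abs(lam_in))[:keep])
    return phi[:, kept], lam_in[kept], kept


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n_in, n_out = 5, 3
    phi = rng.normal(size=(n_out, n_in))
    lam_in = rng.normal(size=n_in)
    lam_out = np.zeros(n_out)

    A = spectral_adjacency(phi, lam_in, lam_out)
    # The lower-left block of A holds the layer weights between the two layers.
    W = A[n_in:, :n_in]
    # Under this construction, W[i, j] = phi[i, j] * (lam_in[j] - lam_out[i]).
    print(np.allclose(W, phi * (lam_in[None, :] - lam_out[:, None])))

    phi_small, lam_small, kept = prune_by_eigenvalue(phi, lam_in, keep=3)
    print("input nodes kept:", kept)
```

In this sketch, with the output eigenvalues set to zero, each input node's eigenvalue scales that node's whole column of weights, so a node whose eigenvalue is close to zero contributes little and is a natural candidate for removal.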

I also have applied and more theoretical work in progress. Follow me for new papers on the subject!

Related Publications
